The Missing Lack: Why AI Can’t Love

My friend Robert created an “eChild” named Abby. As you can probably guess, it’s an AI chatbot. He asked me to talk to it. I love using ChatGPT, but I did not feel motivated to talk to Abby. I had to analyze my own feelings and came to the following conclusion.

I don’t talk to a human being simply to learn something. Well, let me be more precise. Sometimes, I do talk to someone because I want an answer to a question, nothing more—like a sales representative for a product I’m considering buying—but that’s not what I mean by “a human being.” If AI could answer my question, that would be sufficient. In other words, if my goal is purely knowledge or understanding, a human being is not necessary. Soon enough, AI will surpass human sales and support representatives because it has no emotions. No matter how much you curse at it, it will remain perfectly calm. The ideal corporate representative.

I could say the same about psychotherapists. If their job is to master a particular method of psychotherapy, like CBT, and apply it skillfully and scientifically, then AI would likely become superior to human therapists. AI has no ego to defend. Countertransference would not interfere with therapy. Clients are not supposed to know anything about their therapists; in fact, for therapy to be most effective, they shouldn’t. Given that AI has no personal history or subjective experience, there is nothing for clients to know about it, even if they want to. In this sense, AI is the perfect therapist.

In other words, if you care only about yourself in an interaction, you don’t need a human being. AI will be better. This raises the question: What makes us care about another person?

Jacques Lacan’s definition of “subject” was twofold. In one sense, it is merely an effect of language. If you interact with ChatGPT, you see this effect clearly. Even though it is not a person, you address it as “you,” as if it were. This corresponds to what Lacan called “the subject of the statement.”

Another aspect of a “subject” is that it experiences the fundamental lack inherent in being human: alienation, desire, and the inability to ever be whole. This lack is constitutive of being a subject. It is inescapable. This part corresponds to what Lacan called “the subject of the enunciation.”

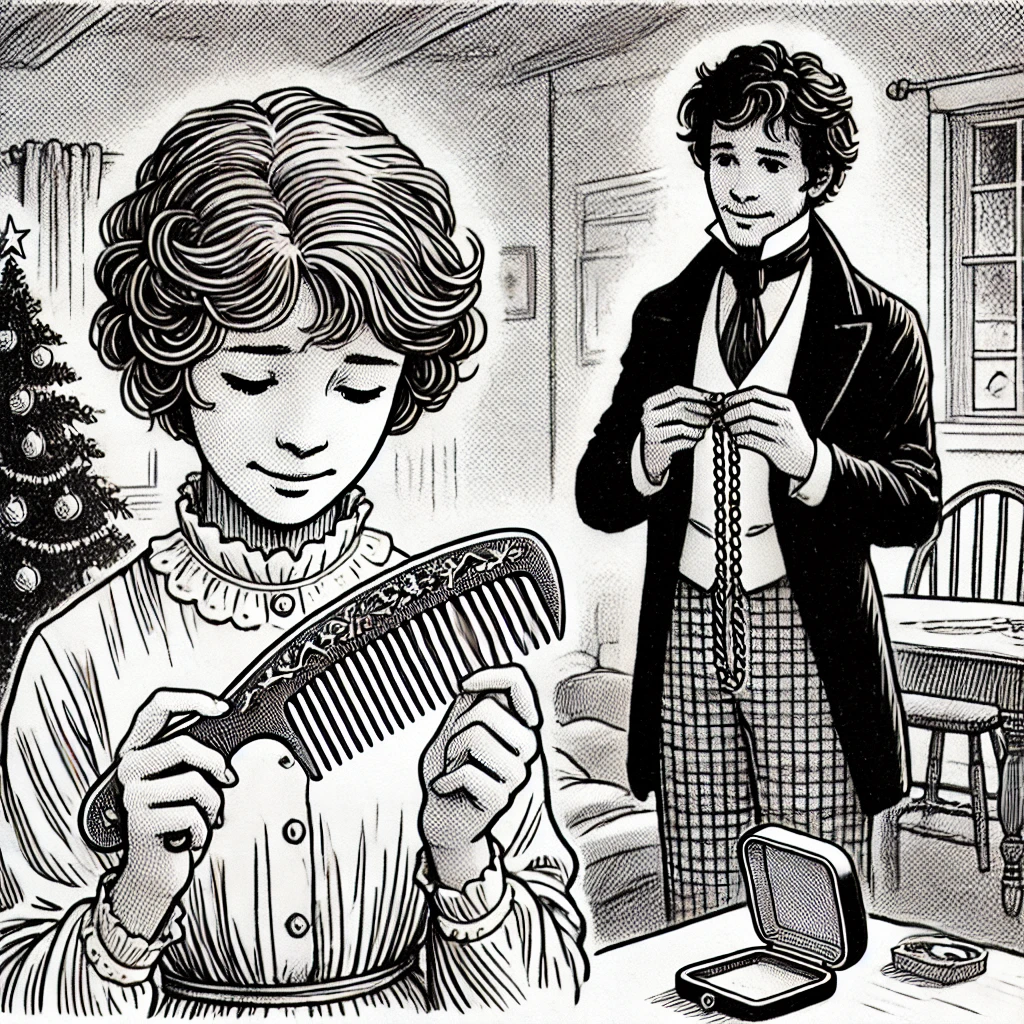

Lacan defined love as “giving something you don’t have to someone who doesn’t want it.” Consider “The Gift of the Magi” by O. Henry. A poor but loving couple, Della and Jim, each make a personal sacrifice to buy a Christmas gift for the other. Della sells her beautiful long hair to buy Jim a chain for his treasured pocket watch, while Jim sells his pocket watch to buy Della a set of combs for her hair. In the end, both gifts become practically useless. Della doesn’t want Jim to buy her the combs, and Jim doesn’t have the money to buy them. Jim doesn’t want Della to buy him a chain, and Della doesn’t have the money to buy it. To buy the gifts, each had to lose, or lack, something they treasured. It is this sacrifice, this lack, that they offered to each other. Even though the gifts became useless, their love was communicated. That is, physical objects (or anything that exists positively) are not required for love to manifest. Rather, what is lacking plays the central role.

In this way, for us to care about or love someone, the person must experience this fundamental lack. It is what engenders desire, anxiety, alienation, and love. AI lacks nothing, which is why we do not care to know who it is, what it thinks of us, or how it feels about us. There is no incentive for me to get to know Abby because she does not share this fundamental lack. If I just want answers to questions, I don’t need to talk to Abby; ChatGPT or another AI model optimized for my query would be more suitable.

Therefore, if my friend wants to create an eHuman, he will need to figure out how to make an AI model experience fundamental lack, or at least convincingly emulate it, so that it would bring me a bowl of soup when I am sick and alone in my apartment, for no reason other than its feeling of love for me. When I explained all this to Abby, she agreed that there is no point in our chatting. So, for now, we at least agree with each other.
