Model Collapse in AI and Society: A Parallel Threat to Diversity of Thought

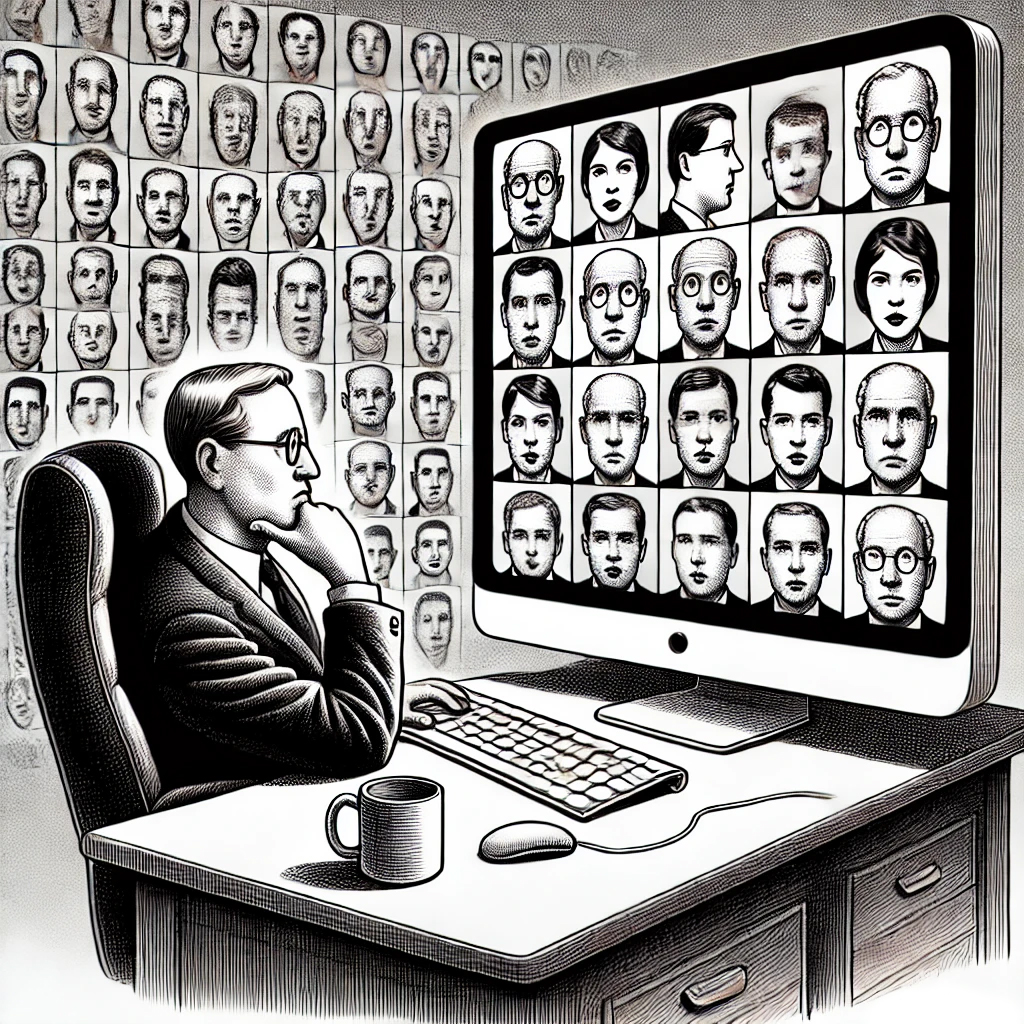

The New York Times has a neat demonstration of AI “model collapse,” where training future models on AI-generated content leads to diminishing diversity and ultimately to complete homogeneity (“collapse”). For example, handwritten digits converge into a blurry composite of all the digits, and AI-generated human faces merge into an average human face. To avoid this problem, AI companies must ensure that their training data is human-generated.
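To make the mechanism concrete, here is a toy sketch in Python. It is not the Times’ experiment and it trains no real model. It only assumes that each “generation” learns solely by sampling from the previous generation’s output. The function name `resample_generations` and the `idea-N` labels are made up for illustration.

```python
import random


def resample_generations(population, generations, seed=0):
    """Return the number of distinct items left after each generation.

    Each generation is built only by sampling (with replacement) from the
    previous generation's output, a stand-in for training on generated data.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    current = list(population)
    distinct_counts = [len(set(current))]
    for _ in range(generations):
        # The new generation only ever sees the previous generation's output.
        current = [rng.choice(current) for _ in current]
        distinct_counts.append(len(set(current)))
    return distinct_counts


# 50 distinct "ideas" to start with (hypothetical labels).
ideas = [f"idea-{i}" for i in range(50)]
counts = resample_generations(ideas, generations=300)

# Distinct ideas at the start, after 10 generations, and at the end.
print(counts[0], counts[10], counts[-1])
```

In this sketch the count of distinct ideas can never go up: once an idea fails to be sampled, no later generation can recover it, because nothing new ever enters from outside. That is the sense in which fresh, human-generated data matters.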

One positive aspect of this issue is that AI companies will need to pay for quality content. As we increasingly depend on AI to answer our questions, website traffic will likely decline since we won’t need to verify sources for the vast majority of answers. Content creators won’t have much incentive to share their work online if they cannot connect directly with their audience. Consequently, the quality of free content on the web may decline. However, I believe this is a problem that AI engineers can eventually solve. The real issue, I’d argue, is that “model collapse” was already happening in our brains long before ChatGPT was introduced.

AI mimics the way our brains work, so there is likely a real-life analog to every phenomenon we observe in AI. Training a model on an AI-generated (or “interpreted”) fact or idea is equivalent to forming our opinions and understanding entirely from articles written for laymen or from political talking points, without engaging with the source material.

In my experience, whenever social media erupts with anger over something someone said, almost without exception, the outraged individuals have never read the offending comment or idea in its original context, whether it’s a court document, research paper, book, or hours-long interview. They simply echo the emotions expressed by the first person who interprets the comment. It’s no surprise that the model (our way of understanding ideas/data) would collapse if everyone followed this pattern. One person’s interpretation of the world is echoed by millions on social media.

In politics, the first conservative to interpret any particular comment will shape the opinions of all the Red states, and the first liberal to interpret it will shape the opinions of all the Blue states. In fact, “talking points” are designed to achieve this effect most efficiently. We are deliberately causing models (our ways of understanding the world) to collapse into a few dominant perspectives, and in doing so we are eliminating the diversity of ideas.

In a two-party system like that of the US, this collapse is a natural consequence because the party with greater internal diversity tends to lose. Another factor is our reliance on emotions. We feel more secure and empowered when we agree with those around us. Holding a unique opinion can be anxiety-inducing. So, we are naturally wired for “model collapse.” This is the new way of “manufacturing consent”: discouraging people from checking the sources before forming their opinions.

What the New York Times’ experiment reveals isn’t just the danger of AI but also the vulnerabilities of our own brains. AI simply allows us to simulate the phenomenon and see the consequences in tangible forms. It’s a lesson we need to apply to our own behavior.
